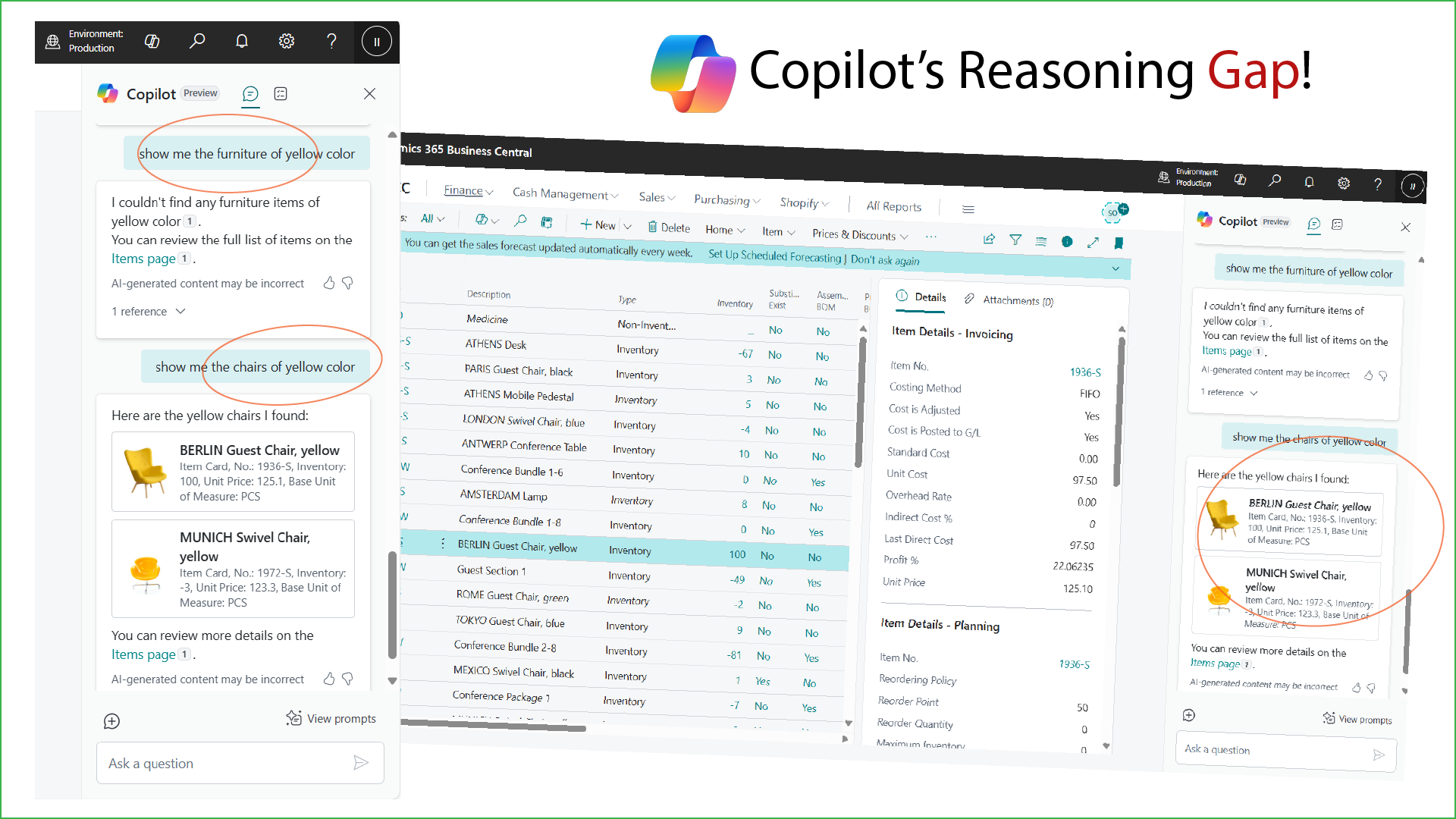

Microsoft Copilot, powered by Azure OpenAI and widely praised for bringing GPT-style reasoning to Dynamics 365 Business Central, recently failed a deceptively simple test: asked for yellow furniture, it returned nothing, even though two yellow chairs clearly existed in the item master. This insight unpacks why that happened, what it means for enterprise AI trust, and how Microsoft (and the rest of us) can close the “common-sense gap” before it erodes confidence in otherwise brilliant copilots.

The Experiment

| Prompt | Copilot’s Response |
|---|---|
| “Show me the furniture of yellow color.” | “I couldn’t find any furniture items of yellow color.” |
| “Show me the chairs of yellow color.” | Returns two yellow chairs (Berlin Guest Chair, Munich Swivel Chair). |

Observation: Copilot recognises chairs when asked directly but fails to infer that chairs are a subset of furniture.

Why Smart AI Made a Basic Mistake

Schema-Bound Filtering

Business Central tables likely tag each item with a specific product group (“Chair”) rather than a parent category (“Furniture”). Copilot’s query engine appears to filter on the literal field value: with no parent mapping, a search for “Furniture” matches nothing.
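To make this failure mode concrete, here is a minimal Python sketch of schema-bound filtering. It assumes each item carries only a leaf-level category code; the `Item` class, the `literal_filter` function and the category codes are hypothetical illustrations, not Business Central’s actual schema or Copilot’s query engine.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Item:
    name: str
    category: str  # leaf-level category code only, e.g. "CHAIR" (hypothetical)
    color: str


# Hypothetical sample data: the two chairs from the experiment, tagged with a leaf category only.
ITEMS = [
    Item("Berlin Guest Chair", "CHAIR", "Yellow"),
    Item("Munich Swivel Chair", "CHAIR", "Yellow"),
]


def literal_filter(items: List[Item], category: str, color: str) -> List[Item]:
    """Keep items whose category field equals `category` exactly; no hierarchy is consulted."""
    return [
        i for i in items
        if i.category == category.upper() and i.color.lower() == color.lower()
    ]


print(literal_filter(ITEMS, "Furniture", "yellow"))                # []
print([i.name for i in literal_filter(ITEMS, "Chair", "yellow")])  # both chairs
```

The filter is correct for the data it sees; the gap is that nothing in the data says a chair is furniture.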

Missing Ontological Inheritance

GPT can reason hierarchically, but Copilot’s wrapper supplies a narrow prompt and dataset. Without an explicit “chair → is-a → furniture” relationship, the model sees two unrelated terms.
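The missing “is-a” link can be illustrated with a small, hypothetical lookup: a child-to-parent map and a function that walks up the chain. With an empty map, “CHAIR” and “FURNITURE” are unrelated; with one explicit edge, the subset question becomes answerable. The names here are assumptions for illustration, not part of any Copilot or Business Central API.

```python
from typing import Dict, Optional


def is_a(child: str, ancestor: str, parent_of: Dict[str, Optional[str]]) -> bool:
    """Walk the child -> parent chain and report whether `ancestor` is reached.

    A category counts as an instance of itself; the `seen` set guards against cycles.
    """
    seen = set()
    node: Optional[str] = child
    while node is not None and node not in seen:
        if node == ancestor:
            return True
        seen.add(node)
        node = parent_of.get(node)
    return False


# Hypothetical ontologies: one with no relationships, one with a single explicit edge.
no_ontology: Dict[str, Optional[str]] = {}
with_ontology: Dict[str, Optional[str]] = {"CHAIR": "FURNITURE", "FURNITURE": None}

print(is_a("CHAIR", "FURNITURE", no_ontology))    # False
print(is_a("CHAIR", "FURNITURE", with_ontology))  # True
```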

Guardrails over Flexibility

Enterprise copilots prioritise deterministic answers and data-quality rules. That safety net sometimes strips away GPT’s broader reasoning capacity.

Risks: Trust, Adoption & Decision Quality

| Risk | Impact |
|---|---|
| Eroded User Trust | Users start double-checking or abandoning Copilot suggestions. |
| Hidden Data Blind Spots | Critical analytics could miss valid records grouped under child categories. |
| Perception vs. Reality | Headlines may claim “AI gets basics wrong,” overshadowing genuine Copilot strengths. |

A Roadmap to “Reasonable AI” in the ERP Stack

Enrich the Business Ontology

- Implement parent–child category tables (e.g., Chair → Furniture).

- Surface those relationships to Copilot via the semantic layer.
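A parent–child category table plus a descendant expansion is enough to make a “furniture” query include chairs. This minimal sketch assumes a simple child-to-parent mapping and also renders the same relationships as plain “is-a” sentences that could be supplied to a model as grounding context. Every name (`PARENT`, `descendants`, `items_in_category`, `ontology_facts`) is hypothetical; how Business Central’s semantic layer actually exposes such data is not covered here.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List, Optional, Set


@dataclass(frozen=True)
class Item:
    name: str
    category: str
    color: str


# Hypothetical category table: child code -> parent code (None marks a root).
PARENT: Dict[str, Optional[str]] = {
    "FURNITURE": None,
    "CHAIR": "FURNITURE",
    "DESK": "FURNITURE",
}

ITEMS = [
    Item("Berlin Guest Chair", "CHAIR", "Yellow"),
    Item("Munich Swivel Chair", "CHAIR", "Yellow"),
]


def descendants(root: str, parent: Dict[str, Optional[str]]) -> Set[str]:
    """Return `root` plus every category beneath it (iterative depth-first walk)."""
    children: Dict[str, List[str]] = defaultdict(list)
    for child, par in parent.items():
        if par is not None:
            children[par].append(child)
    found, stack = {root}, [root]
    while stack:
        for child in children[stack.pop()]:
            if child not in found:
                found.add(child)
                stack.append(child)
    return found


def items_in_category(items: List[Item], category: str, color: str,
                      parent: Dict[str, Optional[str]]) -> List[Item]:
    """Hierarchy-aware filter: match the category or any of its descendants."""
    wanted = descendants(category.upper(), parent)
    return [i for i in items if i.category in wanted and i.color.lower() == color.lower()]


def ontology_facts(parent: Dict[str, Optional[str]]) -> List[str]:
    """Render each parent link as an 'is-a' sentence, e.g. for grounding a prompt."""
    return [f"{c} is-a {p}" for c, p in sorted(parent.items()) if p is not None]


print([i.name for i in items_in_category(ITEMS, "Furniture", "yellow", PARENT)])
print(ontology_facts(PARENT))  # ['CHAIR is-a FURNITURE', 'DESK is-a FURNITURE']
```

Storing only the parent link keeps the table easy to maintain; the full set of descendants is derived at query time.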

Prompt Engineering Enhancements

- Add fallback logic: “If the parent category returns no results, search its child categories.”

- Encourage Copilot to ask clarifying questions—“Do you want me to include chairs?”
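The fallback and the clarifying question can be combined in one step. This hypothetical `search_with_fallback` function tries the literal category first and, only if that returns nothing, searches one level of child categories and returns a question the assistant could ask before presenting the wider results. It is a sketch under those assumptions, not a description of how Copilot’s prompt pipeline is actually built.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional, Set, Tuple


@dataclass(frozen=True)
class Item:
    name: str
    category: str
    color: str


# Hypothetical parent -> children map and sample items.
CHILDREN: Dict[str, List[str]] = {"FURNITURE": ["CHAIR", "DESK"]}
ITEMS = [
    Item("Berlin Guest Chair", "CHAIR", "Yellow"),
    Item("Munich Swivel Chair", "CHAIR", "Yellow"),
]


def search_with_fallback(items: List[Item], category: str, color: str,
                         children: Dict[str, List[str]]) -> Tuple[List[Item], Optional[str]]:
    """Return (results, clarifying_question); the question is None when no fallback was used."""
    def match(categories: Set[str]) -> List[Item]:
        return [i for i in items if i.category in categories and i.color.lower() == color.lower()]

    direct = match({category})
    if direct:
        return direct, None
    # Fallback: this sketch searches only one level of child categories.
    fallback = match(set(children.get(category, [])))
    if not fallback:
        return [], None
    found = ", ".join(sorted({i.category for i in fallback}))
    question = f"No {color} items are tagged {category} directly. Do you want me to include {found}?"
    return fallback, question


results, question = search_with_fallback(ITEMS, "FURNITURE", "yellow", CHILDREN)
print([i.name for i in results])
print(question)  # No yellow items are tagged FURNITURE directly. Do you want me to include CHAIR?
```

Returning the question alongside the results lets the interface decide whether to show the expanded list immediately or wait for the user’s confirmation.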

User-Facing Transparency

- Display the filter Copilot applied (“Category = Furniture”) so users spot gaps instantly.

- Offer an inline “Expand search” toggle.
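Showing the applied filter, and letting the user widen it, could look like the following hypothetical sketch: `build_filter` returns a small object whose `describe()` text is what the interface would display, and the `expand_search` flag stands in for the “Expand search” toggle. The names and display format are assumptions for illustration.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass(frozen=True)
class AppliedFilter:
    categories: Tuple[str, ...]
    color: str

    def describe(self) -> str:
        """Human-readable filter text to display next to the results."""
        if len(self.categories) == 1:
            cat = f"Category = {self.categories[0]}"
        else:
            cat = f"Category in ({', '.join(self.categories)})"
        return f"{cat}, Color = {self.color}"


# Hypothetical parent -> children map.
CHILDREN: Dict[str, List[str]] = {"FURNITURE": ["CHAIR", "DESK"]}


def build_filter(category: str, color: str, expand_search: bool,
                 children: Dict[str, List[str]]) -> AppliedFilter:
    """Build the filter; `expand_search` mimics an inline 'Expand search' toggle."""
    cats = [category]
    if expand_search:
        # Collect all descendants of the requested category.
        stack = list(children.get(category, []))
        while stack:
            c = stack.pop()
            if c not in cats:
                cats.append(c)
                stack.extend(children.get(c, []))
    return AppliedFilter(tuple(sorted(cats)), color)


print(build_filter("FURNITURE", "Yellow", False, CHILDREN).describe())
# Category = FURNITURE, Color = Yellow
print(build_filter("FURNITURE", "Yellow", True, CHILDREN).describe())
# Category in (CHAIR, DESK, FURNITURE), Color = Yellow
```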

Continuous Feedback Loop

- Collect edge-case failures (like this one) as test cases in Microsoft’s Copilot evaluation suite.

- Reward community bug reports with visibility credits or badges—turn customers into co-innovators.
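An edge case like this one translates naturally into a regression check. The harness here is a hypothetical sketch, not Microsoft’s evaluation suite: each `EvalCase` pairs a prompt with the items a correct answer must contain, and `observed_behaviour` is a stub that simply replays the responses reported in the experiment, so the furniture prompt is flagged as a failure.

```python
from dataclasses import dataclass
from typing import Callable, FrozenSet, List

BOTH_CHAIRS = frozenset({"Berlin Guest Chair", "Munich Swivel Chair"})


@dataclass(frozen=True)
class EvalCase:
    prompt: str
    expected: FrozenSet[str]  # items a correct answer must include


CASES = [
    EvalCase("Show me the furniture of yellow color.", BOTH_CHAIRS),
    EvalCase("Show me the chairs of yellow color.", BOTH_CHAIRS),
]


def run_eval(cases: List[EvalCase], answer: Callable[[str], List[str]]) -> List[str]:
    """Run every case and describe each one whose answer misses expected items."""
    failures = []
    for case in cases:
        missing = case.expected - frozenset(answer(case.prompt))
        if missing:
            failures.append(f"{case.prompt!r}: missing {sorted(missing)}")
    return failures


def observed_behaviour(prompt: str) -> List[str]:
    """Hypothetical stub replaying the two responses reported in the experiment."""
    if "chairs" in prompt.lower():
        return ["Berlin Guest Chair", "Munich Swivel Chair"]
    return []


for line in run_eval(CASES, observed_behaviour):
    print("FAIL", line)
```

Checking for missing items rather than exact equality keeps the test focused on silent exclusions, the failure this article is about.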

Why This Matters Beyond One Yellow Chair

“Small reasoning errors today can snowball into large financial or compliance errors tomorrow”

As enterprises shift from static dashboards to real-time, AI-driven decisions, logical completeness becomes as critical as accuracy. A supply-chain planner or financial controller trusting Copilot must know that “furniture” truly means every relevant item—no silent exclusions.

Conclusion

Microsoft Copilot already transforms productivity, yet this episode reminds us that AI brilliance still needs common sense baked into its data structures and prompts. By addressing hierarchical reasoning now, Microsoft—and the broader AI community—can ensure that future copilots never miss the obvious, whether it’s a yellow chair, a sub-ledger entry, or a critical compliance exception.

Call to Action: If you discover similar gaps, document them and share feedback. Incremental, community-driven corrections will shape a more reliable Copilot for every business user.

Published by m.h. – 27/06/2025 :: 12:30AM | Uttara, Dhaka
